
SAIL: MACHINE LEARNING GUIDED STRUCTURAL ANALYSIS ATTACK ON HARDWARE OBFUSCATION

Nelms Institute Contact: Swarup Bhunia

Obfuscation is a technique for protecting hardware intellectual property (IP) blocks against reverse engineering, piracy, and malicious modification. Current obfuscation efforts focus mainly on functional locking of a design to prevent black-box usage. They do not directly address hiding design intent through structural transformations, which is an important objective of obfuscation. We note that current obfuscation techniques incorporate only (1) local and (2) predictable changes in circuit topology. In this paper, we present SAIL, a structural attack on obfuscation using machine learning (ML) models that exposes a critical vulnerability of these methods. Through this attack, we demonstrate that the gate-level structure of an obfuscated design can be largely recovered through a systematic set of steps. The proposed attack is applicable to all forms of logic obfuscation and is significantly more powerful than existing attacks such as SAT-based attacks, since it does not require golden functional responses (e.g., from an unlocked IC). Evaluation on benchmark circuits shows that we can recover an average of about 84% (up to 95%) of the transformations introduced by obfuscation. We also show that the attack is scalable, flexible, and versatile.

MAGIC: MACHINE-LEARNING-GUIDED IMAGE COMPRESSION FOR VISION APPLICATIONS IN INTERNET OF THINGS

Nelms Institute Contact: Swarup Bhunia

The emerging ecosystems of intelligent edge devices in diverse Internet-of-Things (IoT) applications, from automatic surveillance to precision agriculture, increasingly rely on recording and processing a variety of image data. Due to resource constraints, e.g., energy and communication bandwidth, these applications must compress recorded images before transmission. For these applications, image compression commonly requires: 1) preserving the features needed for coarse-grained pattern recognition, rather than the fine details needed for human perception, since the communication is machine-to-machine; 2) a high compression ratio, which improves energy and transmission efficiency; and 3) a large dynamic range of compression, with an easy trade-off between compression factor and reconstruction quality, to accommodate a wide diversity of IoT applications and their time-varying energy/performance needs. To address these requirements, we propose MAGIC, a novel machine learning (ML)-guided image compression framework that judiciously sacrifices visual quality to achieve much higher compression than traditional techniques while maintaining accuracy for coarse-grained vision tasks. The central idea is to capture application-specific domain knowledge and use it efficiently to achieve high compression. We demonstrate that the MAGIC framework is configurable across a wide range of compression/quality levels and can compress beyond the standard quality-factor limits of both JPEG 2000 and WebP. Experiments on representative IoT applications using two vision data sets show 42.65× compression at accuracy similar to that obtained on the source images. We also observe lower variance in compression rate across images with our technique than with JPEG 2000 and WebP.

SAINT – SELF-AWARE INFRASTRUCTURE WITH INTELLIGENT TECHNOLOGIES

Nelms Institute Contact: Swarup Bhunia

This work presents coordinated monitoring of, and response to, emergency situations in a structural complex, as a supplement to traditional emergency response. The technology uses a series of monitoring units, such as environmental sensors, in an anomaly detection scheme that builds a model of the infrastructure’s normal behavior, which can be compared against a database of threats. In addition to these monitoring units, a fleet of response units, consisting of actuators and/or first-responder entities, implements a crowd control protocol and a hierarchical staged response prescribed by a central control system (CCS). The framework gives the infrastructure a brain: a “self-awareness” of emergency situations, with the infrastructure housing both the CCS and, if available, the first-responder entities. Emergency situations include, but are not limited to, fires and criminal activities. The CCS handles high-level decision making, from collecting data to launching first-responder entities, in a systematic manner called staged response. In staged response, the CCS monitors the infrastructure for suspicious events in and around it. When an event triggers a plurality of sensors, the CCS launches one or more first-responder entities to the scene to confirm the threat. The CCS coordinates with monitoring, response, and hybrid mobile units to make decisions that mitigate the threat and communicate it to the appropriate agencies. These units can also make autonomous decisions in the absence of the CCS, based on self-organizing behavior using device-to-device (D2D) technology. The hybrid mobile unit is equipped with several sensors, including but not limited to sound sensors, cameras, short-range radar, and LIDAR (Light Detection and Ranging).
The sensor network will collect data from the environmental sensors, and the actuator network will control doors, windows, and other actuators within the infrastructure based on commands from the CCS. A specific implementation of this framework will include an array of sensors that collectively monitors diverse situations and provides collective intelligence to respond to them appropriately, e.g., by closing or opening doors and windows. These autonomous entities will detect safety issues and violations, such as fires and criminal activities like active shooters and theft, and will respond according to a set of distributed intelligent algorithms.
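The staged-response logic described above can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not the SAINT implementation: the stage names, the `min_sensors` threshold standing in for a “plurality” of triggered sensors, and the `confirm` callback standing in for a first-responder entity’s on-scene check are all hypothetical.

```python
from enum import Enum, auto
from typing import Callable, Iterable, List


class Stage(Enum):
    """Hypothetical stages of a staged response (names are illustrative)."""
    MONITOR = auto()   # watch the infrastructure for suspicious events
    DISPATCH = auto()  # send first-responder entities to confirm a threat
    MITIGATE = auto()  # act on a confirmed threat and notify agencies


def staged_response(
    triggered_sensors: Iterable[str],
    confirm: Callable[[], bool],
    min_sensors: int = 2,
) -> List[Stage]:
    """Return the stages visited while handling one event.

    min_sensors is an assumed stand-in for the "plurality of sensors" rule;
    confirm is an assumed stand-in for a first responder's on-scene check.
    """
    stages = [Stage.MONITOR]
    # Count distinct sensors so one sensor firing repeatedly is not a plurality.
    if len(set(triggered_sensors)) < min_sensors:
        return stages  # not enough corroboration: keep monitoring
    stages.append(Stage.DISPATCH)
    if confirm():
        stages.append(Stage.MITIGATE)  # threat confirmed on scene
    else:
        stages.append(Stage.MONITOR)   # false alarm: resume monitoring
    return stages
```

For example, `staged_response(["smoke-3F", "heat-3F"], confirm=lambda: True)` returns `[Stage.MONITOR, Stage.DISPATCH, Stage.MITIGATE]`, while a single triggered sensor leaves the system in `Stage.MONITOR`. A real deployment would likely also weigh sensor type and location rather than a plain count.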

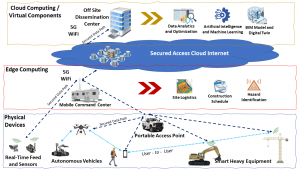

ELEMENTS: CYBERINFRASTRUCTURE SERVICE FOR IOT-BASED CONSTRUCTION RESEARCH AND APPLICATIONS

Nelms Institute Contact: Aaron Costin

This project develops a robust cyberinfrastructure (CI) system and service for construction research and applications, to address current challenges in the construction industry. The outcomes and services this project aims to provide are: 1) a distributed, SDN-managed, AI-assisted IoT-based system that can be adapted and extended to the needs of each research project or application; 2) identification of the data and data security requirements needed to address challenges in the construction industry, and of potential technologies that can provide those data; 3) evaluation of reliable real-time multi-sensor fusion techniques, assessing the ruggedness, usability, and limitations of IoT-based components deployed in dynamic construction environments; 4) a robust prototype system for real-time safety monitoring based on the IoT system framework; and 5) recommendations for potential system configurations, with the appropriate technology and sensors, to meet the needs of each application. The system is named IoT-ACRES, short for IoT-Applied Construction Research and Education Services.

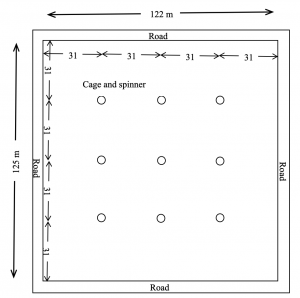

SMART BIO-ASSAY CAGE DEVELOPMENT FOR EVALUATION OF EFFICACY OF MOSQUITO CONTROL ADULTICIDES

Nelms Institute Contact: William Eisenstadt

The proposed work will develop smart sensor and bioassay cage prototypes that will be evaluated in a field environment to demonstrate that they can measure the efficacy of mosquito adulticide applications. This work is unique in that it enables research collaboration between entomologists and biologists from the Anastasia Mosquito Control District (AMCD) and electronics and sensor experts from the University of Florida Department of Electrical and Computer Engineering (ECE). The work will develop a prototype smart mosquito sensor and bioassay cage that 1) evaluates the properties of insect spray aerosols, 2) records environmental information at the time of spraying, such as temperature, humidity, and location, and 3) reports the information wirelessly to a cell phone or a computer. The work will compare the newly developed smart bioassay cage with existing techniques for mosquito cage spray evaluation and build a cross-reference guide.

VCA-DNN: NEUROSCIENCE-INSPIRED ARTIFICIAL INTELLIGENCE FOR VISUAL EMOTION RECOGNITION

Nelms Institute Contact: Ruogu Fang

Human emotions are dynamic, multidimensional responses to challenges and opportunities, which emerge from network interactions in the brain. Disruptions of these network interactions underlie emotional dysregulation in many mental disorders, including anxiety and depression. In carrying out our current NIH-funded research on how the human brain processes emotional information, we have recognized the limitations of empirical studies, including the inability to manipulate the system to establish the causal basis for observed relationships between brain and behavior. In the short term, an AI-based model system that is informed and validated by known biological findings, that supports causal manipulations, and that allows the consequences to be tested against human imaging data would therefore be a highly significant development. In the long term, the model can be further enriched and expanded into a platform for testing a wider range of normal brain functions, as well as how various pathologies affect these functions in mental disorders.

WEB-BASED AUTOMATED IMAGING DIFFERENTIATION OF PARKINSONISM

Nelms Institute Contact: Ruogu Fang

Three distinct neurodegenerative disorders (Parkinson’s disease; multiple system atrophy Parkinsonian variant, or MSAp; and progressive supranuclear palsy, or PSP) can be difficult to differentiate because they share overlapping motor and non-motor features, such as changes in gait. However, they also differ importantly in pathology and prognosis, and an accurate diagnosis is key both to determining the best possible treatment for patients and to developing improved therapies. Previous research has shown that diagnostic accuracy in early Parkinson’s can be as low as 58%, and that more than half of misdiagnosed patients actually have one of the two other disorders. Testing of the new web-based AI tool, which will include MRI images from 315 patients at 21 sites across North America, builds on more than a decade of research in the laboratory of David Vaillancourt, Ph.D., professor and chair of the Department of Applied Physiology and Kinesiology in the UF College of Health & Human Performance, whose work focuses on improving the lives of more than 6 million people with Parkinson’s disease and Parkinson’s-like syndromes. To differentiate between the forms of Parkinsonism, Vaillancourt’s lab has developed a novel, noninvasive biomarker technique using diffusion-weighted MRI, which measures how water molecules diffuse in the brain and helps identify where neurodegeneration is occurring. Vaillancourt’s team demonstrated the effectiveness of the technique in an international, 1,002-patient study published in The Lancet Digital Health in 2019.

REACTIVE SWARM CONTROL FOR DYNAMIC ENVIRONMENTS

Nelms Institute Contact: Matthew Hale

This project will develop novel decentralized feedback optimization strategies that use an online optimization algorithm as a controller to maneuver swarms in real time. This methodology eliminates the need to predict future conditions, which typically cannot be done in unknown and adversarial environments, and its theoretical developments will be complemented by outdoor experiments that validate the approach.
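The idea of using an online optimization algorithm as a controller can be illustrated with a toy sketch in which, at every control cycle, each agent takes one gradient step on a cost it can measure now rather than on a predicted future cost. Everything here is an assumption for illustration, not the project’s method: the quadratic cost (distance to a moving target plus a pull toward neighbors), the step size `alpha`, the coupling weight `beta`, and the circular target path. 2-D positions are represented as Python complex numbers for brevity.

```python
import math


def step(positions, neighbors, target, alpha=0.2, beta=0.1):
    """One decentralized feedback-optimization step (hypothetical cost).

    Agent i descends f_i(x) = |x - target|^2 / 2 + beta/2 * sum_j |x - x_j|^2,
    using only the current target measurement and its neighbors' current
    positions; no prediction of the target's future path is made.
    """
    new_positions = []
    for i, x in enumerate(positions):
        grad = (x - target) + beta * sum(x - positions[j] for j in neighbors[i])
        new_positions.append(x - alpha * grad)
    return new_positions


def simulate(steps=200):
    """Three fully connected agents tracking a target that drifts on a circle."""
    positions = [0 + 0j, 1 + 0j, 0 + 1j]
    neighbors = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
    target = 0j
    for t in range(steps):
        # The target's position is measured each cycle; its future is unknown.
        target = 5 * complex(math.cos(0.01 * t), math.sin(0.01 * t))
        positions = step(positions, neighbors, target)
    return positions, target
```

Because each agent reacts only to the target’s current measured position, the swarm trails a moving target by a small lag rather than anticipating it, which is the trade-off accepted when prediction is unavailable.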

AUTOMATICALLY VALIDATING SOC FIRMWARE THROUGH MACHINE LEARNING AND CONCOLIC TESTING

Nelms Institute Contact: Sandip Ray

Recent years have seen a dramatic increase in the size and complexity of firmware in both custom and SoC designs. Unlike functionality implemented in custom hardware, system functionality implemented in firmware can be updated after deployment, in response to bugs, performance limitations, or security vulnerabilities discovered in the field, or to adapt to changing requirements. Today, it is common to find more than a dozen hardware blocks (also referred to as “intellectual properties” or “IPs”) in a commercial SoC design that implement significant functionality through firmware executing on diverse microcontrollers with different instruction set architectures, e.g., IA32, ARM™, and 8051, as well as proprietary custom instruction sets. Firmware implementations are particularly common for many core algorithms in machine learning systems, since these algorithms typically need to be adapted and updated in the field in response to evolving data patterns, workloads, and use cases throughout the lifetime of the system. Given this extensive use, it is increasingly crucial to ensure that firmware implementations are functionally correct, robust against microarchitectural side channels, and protected against malicious exploitation. The goal of this project is to develop an automated framework for dynamic firmware validation that enables exploration of subtle hardware/firmware interactions while maintaining scalability and performance. Our approach exploits key insights from formal techniques to achieve scalability in test generation.
It involves innovative coordination of two components: (1) a configurable, plug-and-play Virtual Platform (VP) architecture that enables disciplined, on-the-fly selective refinement of hardware modules from VP to RTL; and (2) a concolic test generation framework that combines symbolic analysis with machine learning for targeted exploration of firmware paths that have a high probability of exhibiting errors and vulnerabilities (if they exist). The outcome is a comprehensive, automated methodology for firmware analysis that (1) can be employed early in the system exploration life-cycle, (2) accounts for the interaction of the firmware with the underlying hardware and other IPs, and (3) enables focused, targeted exploration of firmware code to identify functional error conditions and security vulnerabilities.
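The concolic idea can be illustrated with a deliberately tiny sketch: a routine is run on a concrete input while its branch decisions are recorded, and each recorded decision is then flipped to derive a new input that drives execution down an unexplored path. This is an assumption-laden toy, not the project’s framework: `firmware_handler` is a hypothetical stand-in for firmware code, branch conditions are recorded as Python predicates rather than symbolic expressions, brute-force search over a small input domain replaces a constraint solver, and the machine-learning guidance that prioritizes likely-buggy paths is not modeled.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Branch = Tuple[Callable[[int], bool], bool]  # (branch condition, outcome taken)


def firmware_handler(x: int, trace: List[Branch]) -> str:
    """Hypothetical stand-in for a firmware routine; every branch is recorded."""
    def branch(pred: Callable[[int], bool]) -> bool:
        outcome = pred(x)
        trace.append((pred, outcome))
        return outcome

    if branch(lambda v: v > 100):
        if branch(lambda v: v % 7 == 3):
            return "error"  # the hard-to-reach bug
        return "large"
    return "small"


def concolic_explore(program, seed: int,
                     domain: Iterable[int]) -> Dict[Tuple[bool, ...], Tuple[int, str]]:
    """Map each explored path (tuple of branch outcomes) to (input, result)."""
    domain = list(domain)
    worklist = [seed]
    results: Dict[Tuple[bool, ...], Tuple[int, str]] = {}
    while worklist:
        x = worklist.pop()
        trace: List[Branch] = []
        outcome = program(x, trace)  # concrete run, recording branch decisions
        path = tuple(taken for _, taken in trace)
        if path in results:
            continue  # this path has already been explored
        results[path] = (x, outcome)
        # Flip each branch in turn while keeping the earlier decisions fixed.
        for i, (pred, taken) in enumerate(trace):
            prefix = trace[:i]
            for candidate in domain:  # brute force stands in for a solver
                if pred(candidate) != taken and all(p(candidate) == t for p, t in prefix):
                    worklist.append(candidate)
                    break
    return results
```

Starting from the seed input `0` over the domain `range(256)`, the exploration reaches all three outcomes of `firmware_handler`, including the `"error"` path.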

ARTIFICIAL NEURAL NETWORKS MEET BIOLOGICAL NEURAL NETWORKS: DESIGNING PERSONALIZED STIMULATION FOR THE DATA-DRIVEN CONTROL OF NEURAL DYNAMICS

Nelms Institute Contact: Shreya Saxena

Personalized neurostimulation using data-driven models has enormous potential to restore neural activity toward health. However, inferring individualized high-dimensional dynamical models from data remains challenging because such models are under-constrained. Moreover, designing stimulation strategies requires exploring extremely large parameter spaces. To address these issues, we will leverage the wealth of data that multi-subject experiments provide, as well as the computational resources newly available at UF. We will develop new AI methods that use in vivo neural responses to design and implement personalized stimulation in real time. These methods will be developed using functional magnetic resonance imaging (fMRI) datasets collected in-house to examine neural activity related to memory and cognition. We will (Aim 1) build recurrent neural networks of memory-related neural activity, and (Aim 2) design personalized brain stimulation to achieve a memory-enhanced neural response. Promising stimulation strategies will be validated in silico on multiple datasets and finally in vivo using an fMRI-compatible neural probe.

SHF: SMALL: ENABLING NEW MACHINE-LEARNING USAGE SCENARIOS WITH SOFTWARE-DEFINED HARDWARE FOR SYMBOLIC REGRESSION

Nelms Institute Contact: Gregory Stitt

Despite the widespread success of machine learning, existing techniques have limitations and/or unattractive trade-offs that prohibit important usage scenarios, particularly in embedded and real-time systems. For example, artificial neural nets provide sufficient accuracy for many applications, but can be too computationally expensive for embedded usage and may require large training data sets that are impractical to collect for some applications. Even when executed with cloud computing, neural nets often require graphics-processing-unit acceleration, which greatly increases power costs, and power can already dominate the total cost of ownership in large-scale data centers and supercomputers. Similarly, linear regression is a widely used machine-learning technique, but it generally requires the user to specify or guide the model, which is prohibitive for difficult-to-understand phenomena and/or high-dimensional problems. This project shows that symbolic regression complements existing machine-learning techniques by providing attractive Pareto-optimal trade-offs that enable new machine-learning usage scenarios where existing technologies are prohibitive. These benefits come from three key advantages of symbolic regression: 1) automatic model discovery, 2) computational efficiency with minimal loss in capability compared to existing techniques, and 3) lower sensitivity to training set size. Despite being studied for decades, symbolic regression has generally been limited to toy examples because of the challenge of searching an infinite solution space with numerous local optima. This project presents a solution that significantly advances the state of the art via two primary contributions: 1) 1,000,000× acceleration of the symbolic-regression exploration process, and 2) fundamentally new exploration algorithms that are only possible with such significant acceleration.
To accelerate the symbolic-regression exploration process, the investigators introduce software-defined hardware that reconfigures every cycle to provide a solution-specific pipeline, implemented as a virtual hardware overlay on field-programmable gate arrays. Although this acceleration by itself considerably improves on the state of the art in symbolic regression, the more important contribution is enabling new exploration algorithms that are not feasible without massive increases in performance. The investigators use this performance improvement to introduce a new hybrid exploration algorithm that performs multiple concurrent searches using different configurations of genetic programming and deterministic heuristics, combined with two new prediction mechanisms to avoid local optima: sub-tree look-ahead prediction and operator correlation.
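The core of symbolic regression can be sketched in a few lines: candidate models are expression trees, and each is scored by evaluating it over the training data. In the minimal sketch below, the operator set (`add`, `sub`, `mul`), the terminals (`x` and the constant `1.0`), and the use of exhaustive enumeration in place of genetic programming and the project’s hybrid heuristics are all simplifying assumptions. Even this tiny grammar yields 590 candidate trees at depth 2, which illustrates how quickly the search space grows and why faster candidate evaluation matters.

```python
import itertools

# Assumed toy grammar: binary operators plus two terminals.
OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}
TERMINALS = ["x", 1.0]


def evaluate(tree, x):
    """Evaluate a tree: the variable "x", a float constant, or (op, left, right)."""
    if tree == "x":
        return x
    if isinstance(tree, float):
        return tree
    op, left, right = tree
    return OPS[op](evaluate(left, x), evaluate(right, x))


def mse(tree, xs, ys):
    """Fitness of a candidate: mean squared error over the data set."""
    return sum((evaluate(tree, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def trees(depth):
    """Enumerate every tree up to the given depth (exhaustive, toy-sized only)."""
    if depth == 0:
        return list(TERMINALS)
    smaller = trees(depth - 1)
    return list(TERMINALS) + [
        (op, a, b) for op in OPS for a, b in itertools.product(smaller, repeat=2)
    ]


def search(xs, ys, depth=2):
    """Return the lowest-error tree found (a stand-in for a real search)."""
    return min(trees(depth), key=lambda t: mse(t, xs, ys))
```

With `xs = [i / 4 for i in range(-8, 9)]` and `ys = [x * x + 1 for x in xs]`, `search(xs, ys)` returns a tree with zero error, such as `("add", ("mul", "x", "x"), 1.0)`, discovering the model form without the user specifying it.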

WHEN ADVERSARIAL LEARNING MEETS DIFFERENTIAL PRIVACY: THEORETICAL FOUNDATION AND APPLICATIONS

Nelms Institute Contact: My T. Thai

The pervasiveness of machine learning exposes new and severe vulnerabilities in software systems: deployed deep neural networks can be exploited to reveal sensitive information in private training data and to make the models misclassify. In field trials, this lack of protection and efficacy significantly degrades the performance of machine-learning-based systems and puts sensitive data at high risk, exposing service providers to legal action under HIPAA/HITECH and related regulations. This project aims to develop the first framework that advances and seamlessly integrates key techniques, including adversarial learning, privacy preservation, and certified defenses, offering tight and reliable protection against both privacy and integrity attacks while retaining high model utility in deep neural networks. The system is being developed for scalable, complex, and commonly used machine learning frameworks, with broad impact on both industry and education.
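One widely used building block for differentially private deep learning is the clip-and-noise gradient step of DP-SGD: each example’s gradient is clipped to bound its influence, and Gaussian noise calibrated to that bound is added before averaging. The sketch below shows only that step, in plain Python. It is not the framework this project develops, the function names are hypothetical, and it omits the privacy accounting that converts `noise_multiplier` into an (ε, δ) guarantee.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average.

    The noise standard deviation is noise_multiplier * max_norm, matching the
    bound that clipping places on any single example's contribution.
    Privacy accounting is deliberately omitted from this sketch.
    """
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for k, g in enumerate(clip(grad, max_norm)):
            total[k] += g
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]
```

Clipping is what makes the noise meaningful: without a bound on each example’s gradient, no fixed amount of noise could hide any single training record.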

DEEPTRUST: BUILDING COMPETENCY-AWARE AI SYSTEMS WITH HUMAN CENTRIC COMMUNICATION

Nelms Institute Contact: My T. Thai

This project aims to develop a machine learning (ML) system that combats visual misinformation, such as fake or altered photos in a news context, through trust-building machine-human interactions. Specifically, the project will focus on building human trust in the system’s news diagnostics through reported, competency-based self-assessment and human-centric explanations of the visual diagnostics. The research methods will entail: 1) an adaptive ML system that can self-assess the performance level of any classifier, with a provable guarantee; 2) an explainer that is robust to adversarial attacks and presents its explanations in a human-understandable, trusted form; and 3) methods to identify the best human-machine communication approaches in news consumption and the communication preferences of news consumers for such explainers.
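One standard way to attach a provable guarantee to a classifier’s self-assessed accuracy is a Hoeffding bound computed on held-out labeled samples. The sketch below illustrates only that generic idea and is not the project’s method; it assumes the held-out samples are i.i.d. draws from the deployment distribution, and the function name is hypothetical.

```python
import math


def accuracy_lower_bound(correct: int, n: int, delta: float = 0.05) -> float:
    """One-sided Hoeffding lower bound on a classifier's true accuracy.

    With probability at least 1 - delta over n i.i.d. held-out samples,
    true accuracy >= empirical accuracy - sqrt(ln(1/delta) / (2n)).
    """
    if n <= 0 or not 0 < delta < 1:
        raise ValueError("need n > 0 and 0 < delta < 1")
    empirical = correct / n
    margin = math.sqrt(math.log(1 / delta) / (2 * n))
    return max(0.0, empirical - margin)
```

For 900 correct predictions out of 1,000 at `delta=0.05`, the bound is about 0.861; with ten times as many samples at the same empirical accuracy it tightens to about 0.888. A system could report this bound, or withhold a diagnostic when it falls below a chosen threshold.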

FAI: TOWARDS A COMPUTATIONAL FOUNDATION FOR FAIR NETWORK LEARNING

Nelms Institute Contact: My T. Thai

Network learning and mining play a pivotal role across many disciplines, such as computer science, physics, social science, management, neural science, civil engineering, and e-commerce. Decades of research in this area have produced a wealth of theories, algorithms, and open-source systems that answer who/what types of questions. Despite the remarkable progress in network learning, a fundamental question remains largely unexplored: how can we make network learning results and processes explainable, transparent, and fair? Answering this question improves the interpretability, transparency, and fairness of a variety of high-impact network-learning-based applications, including social network analysis, neural science, team science and management, intelligent transportation systems, critical infrastructures, and blockchain networks. This project shifts the focus of network learning from answering who and what to answering how and why. It develops computational theories, algorithms, and prototype systems for network learning, forming the three key pillars of fair network learning: interpretation, auditing, and de-biasing.

FORECASTING TRAJECTORIES OF HIV TRANSMISSION

Nelms Institute Contact: Dapeng Oliver Wu

Despite the advent of combined antiretroviral therapy, the ongoing HIV epidemic still defies prevention and intervention strategies designed to significantly reduce both prevalence and incidence worldwide. Phylodynamic analysis has been used extensively in the HIV field to track the origin of the virus and reconstruct its demographic history at the local, regional, and global levels. However, such studies have so far been only retrospective, with little or no power to predict future epidemic trends. The overarching goal of the project is to develop an innovative computational framework that couples phylodynamic inference and behavioral network data with artificial intelligence algorithms capable of predicting the future trajectories of HIV transmission clusters and identifying key determinants of new infections. We will achieve this goal through three specific aims: 1. develop a phylodynamic-based PRIDE module to forecast HIV infection hotspots [the infected]; 2. develop a behavioral network-based PRIDE module for risk of HIV infection [the uninfected]; and 3. carry out focus groups for deploying the new PRIDE forecasting technology into public health, and implement prevention through the peer change agent model.

I/UCRC CENTER FOR BIG LEARNING (NSF)

Nelms Institute Contact: Dapeng Oliver Wu

The mission and vision of the NSF I/UCRC Center for Big Learning (BigLearning) is to create a multi-site, multi-disciplinary center that explores research frontiers in emerging large-scale deep learning and machine learning for a broad spectrum of big data applications; designs novel mobile and cloud services and big data ecosystems to enable big learning research and applications; transfers research discoveries to meet urgent needs in industry with our diverse center members; and nurtures next-generation talent in a mixed, forward-looking setting with real-world relevance and significance, through the industry-university consortium. The center focuses on emerging large-scale deep learning and machine learning, enabling cloud and big data services, products, and applications in aerospace, e-commerce, unmanned vehicles, precision medicine, mobile intelligence, recommender systems, social robots, surveillance, and, ultimately, machine intelligence.

LIGHTWEIGHT ADAPTIVE ALGORITHMS FOR NETWORK OPTIMIZATION AT SCALE TOWARDS EMERGING SERVICES

Nelms Institute Contact: My T. Thai

The era of cloud computing transformed how information is delivered. With devices becoming “smarter” and “plugged” into the Internet, we are entering a new era of the “Internet of Things” (IoT) and cyber-physical systems. These developments call for scalable and intelligent network algorithms for controlling and coordinating various network components and for managing and optimizing resource allocation. This project puts forth a three-plane view of networking as a conceptual framework to structure network functions and guide the design of network algorithms for timely, resilient, and resource-efficient information delivery: 1) an information plane capturing application semantics and requirements; 2) a (logically) centralized control plane; and 3) a distributed (programmable) communication (data) plane. This project postulates two design principles, and corresponding challenges, for network algorithms: a) the need to co-design centralized and distributed network algorithms, and b) the need for just-in-time (near) optimality.

STREAM-BASED ACTIVE MINING AT SCALE: NON-LINEAR NON-SUBMODULAR MAXIMIZATION

Nelms Institute Contact: My T. Thai

The past decades have witnessed enormous transformations in intelligent data analysis as datasets reach unprecedented scale. Big data analysis is computationally demanding, resource hungry, and increasingly complex. In recent emerging applications, many of the objective functions studied have been shown to be non-submodular or non-linear. Additionally, with dynamics present in billion-scale datasets, such as items arriving in an online fashion, scalable, stream-based adaptive algorithms that can quickly update solutions instead of recalculating them from scratch must be investigated. All of these issues call for scalable, stream-based active mining techniques to handle the many applications of non-submodular maximization in the era of big data. This project develops a theoretical framework, together with highly scalable approximation algorithms with tight theoretical performance guarantees, for the class of non-submodular and non-linear optimization problems. In particular, the project lays the foundation for novel data mining techniques suited to the new era of big data and its emerging applications, and advances the research front of stochastic and stream-based algorithm design.
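The stream-based setting can be illustrated with a single-pass threshold rule: each arriving item is kept if the budget `k` is not exhausted and its marginal gain over the current solution is at least a threshold `tau`, so the solution is updated incrementally rather than recomputed. The sketch below is a generic illustration with a hypothetical set-coverage objective, not the project’s algorithm. Coverage is submodular, and the approximation guarantees of threshold rules like this are usually proved under submodularity, so the non-submodular, non-linear objectives this project targets are precisely where such guarantees must be re-established.

```python
from typing import Any, Callable, Iterable, List


def threshold_stream(
    stream: Iterable[Any],
    gain: Callable[[List[Any], Any], float],
    k: int,
    tau: float,
) -> List[Any]:
    """One pass over the stream: keep an item if fewer than k items are kept
    and its marginal gain over the current solution is at least tau.
    Items are never revisited and the solution is never recomputed."""
    solution: List[Any] = []
    for item in stream:
        if len(solution) < k and gain(solution, item) >= tau:
            solution.append(item)
    return solution


def coverage_gain(solution: List[frozenset], item: frozenset) -> int:
    """Hypothetical objective: how many new elements the item would cover."""
    covered = set().union(*solution)
    return len(item - covered)
```

For the stream `[frozenset({1, 2, 3}), frozenset({2, 3}), frozenset({4, 5}), frozenset({1, 5}), frozenset({6, 7, 8})]` with `k=3` and `tau=2`, the rule keeps `{1, 2, 3}`, `{4, 5}`, and `{6, 7, 8}`, skipping the two items that add fewer than two new elements.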

AI ENHANCED SIDE CHANNEL ANALYSIS

Nelms Institute Contact: Shuo Wang

Side-channel analysis (SCA) is the process of analyzing unintentional signals that hardware emits while it performs operations, such as power draw and electromagnetic emanations, to extract information about those operations. SCA can consistently recover confidential information from algorithms running on microprocessors. Machine learning, and deep learning in particular, greatly expands the threat that SCA poses: these techniques allow SCA to be conducted with fewer measurements and to succeed even against traditional countermeasures, and transfer learning allows them to be easily adapted to new targets. Understanding the capabilities of deep learning for side-channel analysis is therefore an integral step in securing microprocessors and reconfigurable circuits, especially those used in IoT applications, against SCA.
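To make the basic attack concrete, the sketch below runs a classical, non-learning correlation power analysis (CPA) on simulated traces. It rests on several simplifying assumptions: a Hamming-weight leakage model, a single leakage sample per trace, Gaussian measurement noise, and a randomly generated 8-bit substitution table standing in for a real cipher’s S-box (it is not the AES S-box). Roughly speaking, deep-learning SCA replaces the hand-chosen leakage model and correlation test with a model learned from traces.

```python
import random

# Hypothetical nonlinear S-box: a fixed random permutation of 0..255 (not AES).
_rng = random.Random(1)
SBOX = list(range(256))
_rng.shuffle(SBOX)
HW = [bin(v).count("1") for v in range(256)]  # Hamming weight lookup table


def simulate_traces(key, n, noise=1.0, seed=2):
    """Each simulated 'trace' is one sample: HW(SBOX[p ^ key]) plus noise."""
    r = random.Random(seed)
    plaintexts = [r.randrange(256) for _ in range(n)]
    traces = [HW[SBOX[p ^ key]] + r.gauss(0.0, noise) for p in plaintexts]
    return plaintexts, traces


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def cpa_recover_key(plaintexts, traces):
    """Return the key byte whose predicted leakage best matches the traces."""
    def score(guess):
        predicted = [HW[SBOX[p ^ guess]] for p in plaintexts]
        return pearson(predicted, traces)
    return max(range(256), key=score)
```

With 1,000 simulated traces, `cpa_recover_key(*simulate_traces(0x3C, 1000))` recovers the key byte `0x3C`. The nonlinear substitution matters here: it makes wrong key guesses predict leakage that is only weakly correlated with the true leakage.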

DARLING – DRONE-BASED ADMINISTRATION OF REMOTELY LOCATED INSTRUMENTS AND GEARS

Nelms Institute Contact: Swarup Bhunia

This work presents a framework for assisting remote devices using an Unmanned Aerial Vehicle (UAV). The DARLING architecture has three hardware components: the UAV, a base station that houses the UAV, and the remote devices that the UAV services. We describe all the parts the base station and the UAVs need to carry out a smooth remote maintenance procedure. Whenever it is unsafe for the UAV to land near a remote device, we propose using a Detachable Charging Unit (DCU): a platform that carries a battery pack and connects to the remote device to charge it while the UAV services another device. In this invention, we outline three types of maintenance, triggered either by a schedule or by unexpected issues discovered by AI models in the base station and the UAVs. Most maintenance is what we call regular maintenance, in which standard servicing procedures, such as charging and data transfer, take place. Through predictive maintenance, DARLING can also anticipate future issues on the base station, based on diagnostic information collected from the remote device via the UAV. If the UAV predicts or discovers an issue on a device before performing maintenance, it can perform targeted maintenance on that device. We consider two distinct use cases, a) an IoT device in a remote location and b) an IoT device in an inconvenient location, and demonstrate the role of DARLING in these scenarios.

COLLABORATIVE RESEARCH: SHF: MEDIUM: HETEROGENEOUS ARCHITECTURE FOR COLLABORATIVE MACHINE LEARNING

Nelms Institute Contact: Dapeng Oliver Wu

The recent breakthrough of on-device machine learning with specialized artificial-intelligence hardware brings machine intelligence closer to individual devices. To leverage the power of the crowd, collaborative machine learning makes it possible to build machine-learning models from datasets distributed across multiple devices while preventing data leakage. However, most existing efforts focus on homogeneous devices; given that participants in practice are widespread yet heterogeneous, managing this immense heterogeneity is urgently important but challenging. The research team develops heterogeneous architectures for collaborative machine learning to achieve three objectives under heterogeneity: efficiency, adaptivity, and privacy. The proposed heterogeneous architecture for collaborative machine learning is bringing tangible benefits to a wide range of disciplines that employ artificial intelligence technologies, such as healthcare, precision medicine, cyber-physical systems, and education. The research findings of this project are intended to be integrated into existing courses and K-12 programs. Furthermore, the research team is actively engaged in activities that encourage students from underrepresented groups to participate in computer science and engineering research.
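A common baseline for collaborative learning of this kind is federated averaging (FedAvg), in which each device trains locally and only model parameters, not raw data, are sent for aggregation. The sketch below shows just the server-side aggregation step, weighting each client’s parameters by its local dataset size. It is a generic baseline, not the heterogeneous architecture this project develops, and the function name is hypothetical.

```python
def federated_average(client_params, client_sizes):
    """Dataset-size-weighted average of client parameter vectors (FedAvg step).

    client_params: one list of floats per client, all of the same length.
    client_sizes: number of local training examples held by each client.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(p[k] * n for p, n in zip(client_params, client_sizes)) / total
        for k in range(dim)
    ]
```

For example, `federated_average([[1.0, 0.0], [3.0, 4.0]], [1, 3])` returns `[2.5, 3.0]`. Plain size-weighted averaging distinguishes clients only by data volume; differences in hardware, speed, and data distribution across devices are the kind of heterogeneity such a baseline does not address.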